Polycam-ustreamer

Why

Many IP cameras are built around variants of the Raspberry Pi. Especially with its CSI/MIPI camera it is a powerful and relatively inexpensive system, much more flexible than anything else on the market. Features can be easily added on demand.

USB UVC webcams are another flexible kind of optics. They come in all sorts of shapes and capabilities.

The most interesting ones are:

- cheapest generics, usually 640x480, priced from $2 a pop

- endoscope-class, usually also 640x480, some 720p or even 1080p, inexpensive, diameters of 6-8mm, easy to build into tight spaces (toolheads)

- USB microscopes, cheapest ones also 640x480, often with surprisingly good optics

These cams can be used for many sorts of optical supervision/surveillance, alignments, and even machine vision.

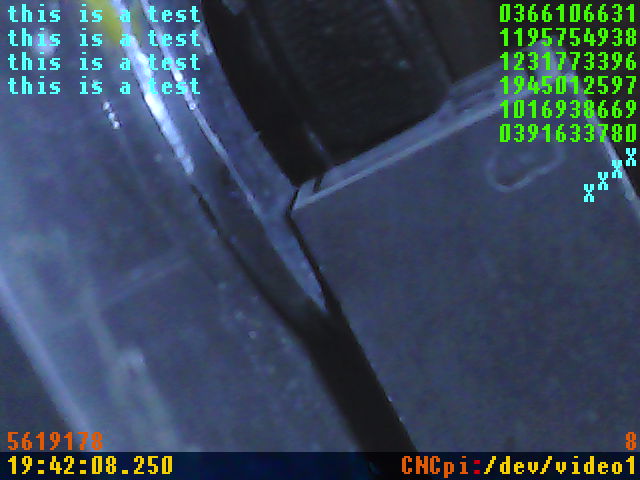

An example can be a CNC machine, with one cam surveying the overall workspace, another on the toolhead looking at the tool-workpiece interaction,

yet another on the same toolhead but looking straight down (and possibly being a microscope) for optical alignments and workpiece measurements/coordinate

acquisition, one more on the side of the machine to inspect the tool for dimensions and wear...

Streaming background

Streaming of video consists of delivery of image frames from camera sensor to the user display (or machine vision software).

This consists of the sensor-encoder pathway and encoder/server-client pathway.

USB streaming quirks

USB cams are operated through their /dev/videoX device, via the Video4Linux API.

The camera gets opened and set up via a series of ioctl calls. Then the data are acquired either by requesting a single frame, or by switching on frame streaming.

When a streaming server is started, it opens the assigned camera and tells it to stream. It receives the images typically in a rotating set of

hardware buffers - even if no client is attached to the server.

When streaming, the camera reserves a fair amount of bandwidth on the USB bus, for isochronous transfers. Several cams running on individual

streaming servers can therefore quickly run out of available USB bandwidth. This is especially annoying with smaller computers with weaker USB

interfaces, not built for this kind of load. Raspberry Pi up to v3 is an example - two streams of non-negligible resolution and the whole USB

subsystem starts failing.
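To get a feel for how quickly the bus fills up, here is a rough back-of-the-envelope sketch. It assumes uncompressed 4:2:2 frames at 2 bytes per pixel and compares them to the nominal 480 Mbit/s signalling rate of USB 2.0 high speed. The usable payload is lower, and cameras reserve isochronous bandwidth in fixed steps, so treat the numbers as optimistic. The function names are made up for this illustration.

```python
# Nominal USB 2.0 high-speed signalling rate; the usable payload is lower.
USB2_HIGH_SPEED_BITS_PER_S = 480_000_000


def raw_stream_bits_per_s(width, height, fps, bytes_per_pixel=2):
    """Bit rate of an uncompressed stream (default: 4:2:2, 2 bytes/pixel)."""
    return width * height * bytes_per_pixel * 8 * fps


def bus_share(streams):
    """Fraction of the nominal bus rate taken by a list of (w, h, fps) streams."""
    total = sum(raw_stream_bits_per_s(w, h, fps) for (w, h, fps) in streams)
    return total / USB2_HIGH_SPEED_BITS_PER_S


if __name__ == "__main__":
    cams = [(640, 480, 30), (640, 480, 30)]
    print(f"two VGA cams at 30 fps: {bus_share(cams):.0%} of nominal USB 2.0")
```

Even two modest VGA streams take well over half of the nominal rate, which fits the observation that a second camera is often enough to upset a weak USB subsystem.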

Streaming of multiple cams therefore brings rather heavy issues - especially as the cams are often not needed all at once, in most cases

selecting just one does the job well enough.

No software however appears to hot-select/hot-swap cams on external demand, without interrupting the stream.

stream formats

Data formats are commonly identified by FourCC codes, four-character codes.
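As a small sketch of how such a code maps to a number: V4L2 packs the four ASCII characters into a 32-bit integer with the first character in the lowest byte (the v4l2_fourcc macro in videodev2.h). The Python helper names below are made up for illustration.

```python
def fourcc(code: str) -> int:
    """Pack a four-character code into a 32-bit integer, first char in the lowest byte."""
    if len(code) != 4:
        raise ValueError("a FourCC must be exactly four characters")
    a, b, c, d = (ord(ch) for ch in code)
    return a | (b << 8) | (c << 16) | (d << 24)


def fourcc_to_str(value: int) -> str:
    """Reverse of fourcc(): unpack the integer back into its four characters."""
    return "".join(chr((value >> shift) & 0xFF) for shift in (0, 8, 16, 24))


if __name__ == "__main__":
    for name in ("YUYV", "MJPG", "BA81"):
        print(name, hex(fourcc(name)))
```

The reverse helper is handy when a driver reports a pixel format as a bare number.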

stream formats, sensor-to-host

The best known encoding, used with virtually all framebuffers, is RGB. Each pixel has a triplet of values for red/green/blue brightness.

Sensors however often use YUV, where, instead of red/green/blue, each pixel has a triplet of intensity (Y) and two chroma values, describing the color (U/V, or Cb/Cr).

Here the human eye's lower color resolution compared to brightness resolution can be leveraged to reduce the amount of data by chroma subsampling.

With camera sensors, 4:2:2 subsampling is often used. Each pair of pixels then contains two values for intensity (one each), and one shared value for color (as U/V pair).

The data volume is instantly reduced by a third.

The frame formats come in two families:

- planar - Y,U,V data stored separately in adjacent blocks

- packed - Y,U,V data stored together for each "macropixel" (pixel group with data); suitable for RGB, 4:4:4, 4:2:2, 4:1:1

A common planar (strictly speaking semi-planar) format is NV21, used for Android camera previews. Here the 4:2:0 image is sent as an array of Y values, followed by an array of interleaved V/U values (NV12 is the same layout with U/V order).
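A minimal sketch of where the samples of one pixel live in such a buffer, assuming the usual NV21 layout (full-resolution Y plane, then one V/U byte pair per 2x2 pixel block) and even width and height. The function names are hypothetical.

```python
def nv21_frame_size(width, height):
    """Total buffer size: one Y byte per pixel plus one V/U pair per 2x2 block."""
    return width * height * 3 // 2


def nv21_offsets(width, height, x, y):
    """Return byte offsets (Y, V, U) of pixel (x, y) in an NV21 buffer."""
    y_offset = y * width + x
    # The chroma plane starts right after the Y plane; each chroma row holds
    # width/2 interleaved V,U pairs and serves two image rows.
    chroma_base = width * height + (y // 2) * width + (x // 2) * 2
    return y_offset, chroma_base, chroma_base + 1


if __name__ == "__main__":
    print(nv21_frame_size(640, 480))
    print(nv21_offsets(640, 480, 10, 5))
```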

Most cameras however provide data as packed, either in individual pixels containing all data (RGB888, RGB565, YUV444...)

or in "macropixels", groups of pixels with some data (typ. color) shared across 2, 4, 8, or more pixels.

The most important pixel formats are:

| format | subsampling | bytes/pixel | pixels per color info | notes |
|---|---|---|---|---|
| RGB888 | 4:4:4 | 3 | 1 | every pixel has full RGB triplet, full 8bit color depth; also called RGB24 |
| RGB565 | 4:4:4 | 2 | 1 | every pixel has full RGB triplet, reduced color depth; also called RGB16 |
| YUV444 | 4:4:4 | 3 | 1 | every pixel has full YUV triplet |
| YUV422 | 4:2:2 | 2 | 2 | color shared across even/odd horizontal pixel pairs |
| YUV411 | 4:1:1 | 1.5 | 4 | color shared across horizontal quads of pixels |
| YUV420 | 4:2:0 | 1.5 | 4 | color shared across 2x2 pixel squares |
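The bytes-per-pixel figures translate directly into frame sizes. The sketch below just multiplies them out. The dictionary and function name are made up for this example, and fractions are used so that the 1.5 bytes/pixel formats stay exact.

```python
from fractions import Fraction

# Bytes per pixel for the pixel formats discussed in this section.
BYTES_PER_PIXEL = {
    "RGB888": Fraction(3),
    "RGB565": Fraction(2),
    "YUV444": Fraction(3),
    "YUV422": Fraction(2),
    "YUV411": Fraction(3, 2),
    "YUV420": Fraction(3, 2),
}


def frame_bytes(fmt, width, height):
    """Size of one uncompressed frame in bytes."""
    size = BYTES_PER_PIXEL[fmt] * width * height
    if size.denominator != 1:
        raise ValueError("frame dimensions do not fit the macropixel size")
    return int(size)


if __name__ == "__main__":
    for fmt in BYTES_PER_PIXEL:
        print(fmt, frame_bytes(fmt, 640, 480))
```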

Another family of pixel formats is derived from the RGB model, using the Bayer filter arrays. Here, the "macropixels" are composed of 2x2 arrays of single-color pixels:

| fourcc | BA81 | GBRG | GRBG | RGGB | BG10 | GB10 | BA10 | RG10 |
|---|---|---|---|---|---|---|---|---|
| pixels, row 1 | BG | GB | GR | RG | BG | GB | GR | RG |
| pixels, row 2 | GR | RG | BG | GB | GR | RG | BG | GB |
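To make the tile layout concrete, here is a small sketch that tells which color filter sits over a given sensor pixel. The tile strings are read row by row from the fourcc/pixels listing above, and the helper name is hypothetical. The 10-bit variants (BG10, GB10, BA10, RG10) use the same tiles, only with wider samples.

```python
# 2x2 Bayer tiles, row-major: first two letters are row 0, last two are row 1.
BAYER_TILES = {
    "BA81": "BGGR",
    "GBRG": "GBRG",
    "GRBG": "GRBG",
    "RGGB": "RGGB",
}


def bayer_color(fourcc, x, y):
    """Return 'R', 'G' or 'B' for the filter over sensor pixel (x, y)."""
    tile = BAYER_TILES[fourcc]
    return tile[(y % 2) * 2 + (x % 2)]


if __name__ == "__main__":
    for row in range(4):
        print("".join(bayer_color("RGGB", col, row) for col in range(8)))
```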

Sensor data come from the camera usually packed, in several possible formats:

- YUYV - 4:2:2 subsampling, pixel pairs with [Y1][U12] [Y2][V12] values; two bytes per pixel

- UYVY - 4:2:2 subsampling, pixel pairs with [U12][Y1] [V12][Y2] values; two bytes per pixel

- RGB565 - 4:4:4 subsampling, individual pixels with [R:5/G:3][G:3/B:5] values, three numbers packed into two bytes; two bytes per pixel

- RGB888 - 4:4:4 subsampling, individual pixels with [R][G][B] values; three bytes per pixel; most common for display framebuffers

- compressed - some cameras have hardware compression; common are MJPEG and H.264; no raw pixel data here

  - per-frame compression - JPEG/MJPEG, each frame is a separate entity

  - full-stream compression - H.264, MPEG4..., leveraging interframe differences for higher compression at high hardware load cost

  - streaming software usually just hands over the frames/stream to clients, without further processing

  - inability to insert overlays
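The packed layouts in the list above can be sketched in a few lines of plain Python. The first helper expands YUYV macropixels ([Y1][U][Y2][V]) into per-pixel (Y, U, V) triples, the second squeezes an 8-bit RGB triplet into a 16-bit RGB565 value. Both names are made up for illustration, and the byte order of the RGB565 word in memory is deliberately left out, as it differs between format variants.

```python
def unpack_yuyv(buf):
    """Expand a YUYV buffer into a list of (Y, U, V) tuples, one per pixel."""
    if len(buf) % 4:
        raise ValueError("YUYV data comes in 4-byte macropixels (2 pixels)")
    pixels = []
    for i in range(0, len(buf), 4):
        y1, u, y2, v = buf[i:i + 4]
        # Both pixels of the pair share the same U and V sample.
        pixels.append((y1, u, v))
        pixels.append((y2, u, v))
    return pixels


def pack_rgb565(r, g, b):
    """Pack 8-bit R, G, B into one 16-bit value: 5 bits R, 6 bits G, 5 bits B."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


if __name__ == "__main__":
    print(unpack_yuyv(bytes([10, 100, 20, 200])))
    print(hex(pack_rgb565(255, 128, 0)))
```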

There is a plethora of frame and pixel formats. Best list on hand is the V4L2 header, /usr/include/linux/videodev2.h.

stream formats, server-to-clients

Video streaming comes in several different formats, each suitable for different use cases.

- raw image format

  - uncompressed frames

  - VERY high bandwidth demands

  - full sensor data without loss, no compression artefacts

  - may be preprocessed (eg. debayered into YUV) or left as raw pixel values

  - lossless compression in some cases

- Motion JPEG, MJPEG - each frame is a separate JPEG image

  - intraframe-only, lower compression ratio as no inter-frame commonalities can be leveraged

  - simpler stream processing, each frame is a separate independent entity

  - low hardware requirements on encoding

  - wide native support in browsers

  - usually as HTTP stream of individual frames, can be RTP stream

- MPEG-4

  - interframe compression

  - high compression ratio, less than H.264

  - mostly replaced by H.264

- AVC, H.264 - interframe compression, keyframes and difference frames

  - high compression ratio

  - hardware-intensive
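Because every MJPEG frame is an independent JPEG, a client can cut a received byte stream into frames with very little logic. The sketch below scans for the JPEG start-of-image (FF D8) and end-of-image (FF D9) markers. It is a naive illustration only: a robust client of an HTTP multipart stream would rather use the part boundary and Content-Length headers, and marker scanning can be fooled by embedded thumbnails. The function name is made up.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpeg_frames(data):
    """Return the complete JPEG frames found in a byte buffer, in order."""
    frames = []
    pos = 0
    while True:
        start = data.find(SOI, pos)
        if start < 0:
            break
        end = data.find(EOI, start + 2)
        if end < 0:
            break  # last frame is still incomplete, wait for more data
        frames.append(data[start:end + 2])
        pos = end + 2
    return frames


if __name__ == "__main__":
    stream = b"--boundary\r\n" + SOI + b"frame1" + EOI + b"\r\n--boundary\r\n" + SOI + b"fr"
    print(len(split_jpeg_frames(stream)), "complete frame(s)")
```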

transport layer

The data can be transferred by several different means:

- TCP stream - higher latency, buffering, no packet loss

  - RTMP

  - HTTP

    - MJPEG over HTTP - the most common for smaller webcams

    - HLS, MPEG-DASH, CMAF

  - RTMPT - RTMP tunneled through HTTP

- UDP stream - low latency, vulnerable to packet loss, can be multicast

  - RTP - used by WebRTC

  - RTSP

    - RTP+RTCP - some webcams (with H.264)

  - SRT

    - codec-agnostic, opensource

choices

camera side:

- raw - usually YUV, cheapest, host computer must do the encoding but can manipulate image, include overlays...

- compressed - host computer can just shovel data to stream, but can't do overlays without decoding; sometimes used to push high-res at reasonable framerates through limited-speed USB

- MJPEG - discrete frame compression, easy to implement, browser compatibility, cheap end of in-device compression

- MPEG4 - better compression, video stream

- H.264 - even better compression, more expensive devices

stream side:

- MJPEG - easy to implement, browser compatibility, modest demands on computer

- MPEG4 - more computationally demanding

- H.264 - even more demanding, even better compression

For lower framerates and smaller resolutions, the MJPEG is often the best choice.

formats/codecs overview

- format - the standard itself (e.g. H.264)

- codec - format implementation (e.g. OpenH264)

Formats:

- MJPEG (motion JPEG) - since mid-1990s, for nonlinear editing; sequence of discrete frames

- MPEG-4

- H.261, year 1988-1993 - for video over ISDN at CIF/QCIF, DCT-based, 16x16 4:2:0 macroblocks

- MPEG-1 (MPEG-1 part 2), year 1993 - for VHS-quality video/CD audio to 1.5 Mbps (26:1, 6:1), in many products; most common 320x240, 352x240, 352x288 (SIF formats); 16x16 macroblocks; includes MP3 spec

- H.262 (MPEG-2 part 2) - video codec of the MPEG-2 - similar to MPEG-1, supports also interlaced video; not optimized for low bitrates; MPEG-2 decoders can play MPEG-1

- MPEG-2 (MPEG-2, H.222/H.262, MPEG-2 part 2), year 1995-2013 - H.262 video codec plus MPEG-2 part 3 and part 7 audio codecs

- H.263, year 1996-2005 - low-bitrate for videoconferencing, DCT-based; baseline H.263 can be decoded by MPEG-4 decoders

- MPEG-4 part 2 (MPEG-4) - most commonly Simple and Advanced Simple profiles for low-res low-bitrate (phones, videoconferencing, surveillance); H.263-based

- H.264 (AVC, Advanced Video Coding, MPEG-4 Part 10, MPEG-4 AVC), year 2003 onwards - block-oriented, motion-compensated integer-DCT - BluRay, HDTV, webstreaming TV, most common format (as of 2020)

- Theora, year 2004-2011 - free, with Ogg container and Vorbis audio

- H.265 (HEVC, High Efficiency Video Coding) - year 2013 onwards - integer DCT/DST with variable 4x4..32x32 blocks, 25-50% better compression than H.264/AVC, second most common format (as of 2020)

- H.266

- Daala

  - AV1 predecessor

- AV1

- VC-3

  - SMPTE standard, highres postprod video, independent frames, similar to MJPEG

- VP9

container formats

Comparison of video container formats

Video stream is often present together with audio stream or other video streams, metadata, subtitles, program guide, etc., sometimes standalone as the only component in the container.

The different data types get multiplexed together in a container format.

Container formats are used for combining several different chunks of information together; e.g. a .jpg image is a JFIF container with JPEG data as a chunk inside.

In video, most common formats are:

- ASF (Advanced Systems Format), by Microsoft, for WMV and WMA streams

- IFF, precursor of RIFF

- RIFF (Resource Interchange File Format)

- AVI (Audio Video Interleave), by Microsoft; older, rich support of formats/codecs

- WAV (Waveform Audio File Format), by Microsoft

- MKV (Matroska) - open format

- MPEG-TS (MPEG transport stream), for digital broadcasting and unreliable media

- MP4 (MPEG-4 Part 14) - rich support of codecs (incl. MJPEG); DO NOT confuse with MP4 aka MPEG-4 Part 2, one is container the other is compression/format

- Ogg, for Vorbis/Opus audio and Theora video

Common "naked" streams are eg. WMA/WMV and MP3.

To play a file, the player has to understand both the container format and the codecs used inside. If a stream codec is not supported,

only video or audio can be played with the other missing.

How

Raspberry Pi was chosen as a platform for its availability and cost.

An open-source streaming software with low latency and hardware acceleration was found: µstreamer (ustreamer).

The compression is accelerated via the OpenMAX (OMX) API of the Raspberry Pi's GPU.

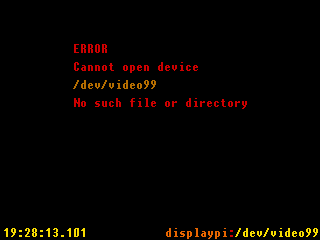

The µstreamer (ustreamer) was found to support hotplugging of the cameras.

In case the /dev/videoX it is connected to cannot be opened, a blank "NO SIGNAL" image is streamed instead. Meanwhile the main stream thread is attempting to reconnect.

This behavior was leveraged for multiple camera streams selection. The request rewrites the device name, then the frame acquisition loop is exited

and reconnection is triggered.

The reconnection takes roughly 500 milliseconds. Most of the time is between the IOCTL call for streaming enable and the

moment when select() starts actually getting some data.
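The switching trick can be modelled in a few lines. This is only a Python sketch of the control flow described above, not the actual C code of ustreamer: the state dictionary, the helper names and the fake device opener are all assumptions made for the illustration, and the real loop also sleeps between reconnection attempts.

```python
import itertools

BLANK = "NO SIGNAL"  # stand-in for the blank frame


def stream_loop(state, open_device):
    """Yield frames forever; reopen the device whenever a switch is requested."""
    while True:
        try:
            frames = open_device(state["device"])
        except OSError:
            # Device cannot be opened: serve the blank frame and retry.
            yield BLANK
            continue
        state["switch"] = False
        for frame in frames:
            yield frame
            if state["switch"]:
                break  # leave the acquisition loop, reconnect to the new device


def request_switch(state, path):
    """What the HTTP request does: rewrite the device name, flag the loop."""
    state["device"] = path
    state["switch"] = True


def fake_open(path):
    """Hypothetical stand-in for opening /dev/videoX; /dev/video9 is 'unplugged'."""
    if path == "/dev/video9":
        raise OSError("cannot open " + path)
    return (f"{path}#{i}" for i in itertools.count())


if __name__ == "__main__":
    state = {"device": "/dev/video0", "switch": False}
    loop = stream_loop(state, fake_open)
    print(next(loop), next(loop))
    request_switch(state, "/dev/video2")
    print(next(loop))
```

The client side never notices the switch as a broken connection; it simply starts receiving frames from another device (or the blank frame while reconnecting).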

code architecture

ustreamer is a multithreaded software. The important threads are:

- http - handles the connections

- stream - connects to camera device, sets up the parameters, then reads frames as they come in

- workers - several threads, taking raw frames from the cam and handling the compression; allows spreading the load over multiple CPU cores

The individual source files handle different subsystems:

config.h

! device.c/h open/close/setting/ioctls of /dev/video

encoder.c/h

gpio.h raise/lower a GPIO to profile code

logging.c/h output logs

main.c

options.c/h commandline options

picture.c/h image buffer copy and compare (for identical frame drop)

process.h comm to parent process, process name

! stream.c/h main loop for the read-compress-output

threading.h

tools.h

xioctl.h defines for ioctls

http:

base64.c/h base64 enc/dec

blank.c/h read JPEG image to the "no signal" static frame

mime.c/h guess MIME type from extension

path.c/h simplification of URL paths with doubledots etc.

! server.c/h HTTP server connections, request handling, stream handling; leverages libevent2

static.c/h static page definitions - blank image, main page

unix.c/h unix sockets

uri.c/h parameter extraction from parsed HTTP requests; bool and strings

http/data:

encoders/cpu:

encoder.c/h basic nonaccelerated compression - simple to understand

encoders/hw:

encoder.c/h pass-through for already-compressed data from cam - simplest but useless for overlays

huffman.h

encoders/omx:

component.c/h

encoder.c/h OpenMAX accelerated compression

formatters.c/h state/error codes to strings

The threads are communicating through shared data structures.

Some structs have pairs of static (where setups happen) and runtime ("run") for live data related to the connection. The runtime one is usually a child element.

The bulk of modifications is in stream.c and the http/server.c.

important functions

- stream.c

- stream_loop - the main loop handling video frame acquisitions; has endless loop starting with cam device opening, then config, then reading frames

- stream_init_loop - at beginning of stream_loop, calls _stream_init repeatedly until it succeeds

- _stream_init - calls device_open and device_switch_capturing

- _worker_thread - multiple threads; takes the raw frame, optionally applies overlays, compresses it, moves it to struct where the http streaming picks it up

- additional:

- draw_overlay - texts (and optional graphics) to draw on the camera frames

- blankbuf_draw - texts (and optional graphics) to draw on the blank frame

- http/server.c

- http_server_listen - handles callback events

- http_callback_<function> - HTTP-invoked functions

- device.c

- device_open - opens the /dev/videoX device

- device_select - select() call on currently open video device, waits for the frame to come in; called from stream_loop

gotchas

The makefile does not take into account the dependencies on header files. Change a struct in eg. device.h/device.c, recompile, and the binary will segfault somewhere where the ol' compiled .o files expect the old struct data positions. Oopsies.

make clean and a little time solves this.

The assert() call is positive on evbuffer_add_printf and negative on evbuffer_add: evbuffer_add_printf returns the number of bytes added, while evbuffer_add returns 0 on success.

- assert(evbuffer_add_printf(buf,"ERROR OPENING FILE '%s': %s\n",fn,strerror(errno)));

- assert(!evbuffer_add(buf, (void *)EXPOSED(picture->data), EXPOSED(picture->used)));

Note the "!"!!!

dirty/todo

Many of the modifications rely on global variables instead of their data being nicely packed into structs.

This will be cleaned up Sometime Later.

options added to ustreamer

- -C <filename>, --camsfile <filename> - file for the /cams URL, plain HTML or executable script generating the page; use eg. the prepared camspage.sh

- -F </dev/fbX>, --framebuffer </dev/fbX> - framebuffer for the "local echo"; /dev/fb1 for small SPI TFT LCDs on raspi

- --raspicam-rightmost - overwrite rightmost pixel (or two pixels) with the preceding pixel value; for raspi camera which tends to make the rightmost pixel white

- rightmost pixel is well-visible, on integrated black frame even the second rightmost accumulates more brightness than it should

- todo: select of one or two columns (repeated option?)

- todo: per-device setting, or maybe autodetection which one is the CSI/MIPI raspicam

added makefile options

make <option>=1 <anotheroption>=0 ... (all listed options enabled by default)

- compression

- WITH_OMX - enable OMX hardware compression (Shad: changed to enabled by default, was present originally)

- server

- WITH_HTTP_GETCAMS - enable /cams URL, --camsfile option, for a script generating "homepage" with cams list

- HTTP_GETRAW - enable /snapshotraw URL, which gives a raw YUV frame without overlays and compression artefacts

- WITH_UNSAFE_DEFAULTS - change server binding from 127.0.0.1 to 0.0.0.0 by default

- DEFAULT_PORT=8000 - set local server port, 8000 by default

- frames

- WITH_BLANKBUF - enable blank buffer, for text overlays with messages for "no signal" frames

- local echo

- WITH_FB - enable framebuffer, --framebuffer option, for "local echo"

- dev

- WITH_HTTP_EXIT - enable /exit URL, which stops the server and exits it (with zero response on HTTP call); for restart after software update, development

- TEST_OVERLAY - more overlay text on the frames, with some random numbers, to show activity

URLs

- /stream - MJPEG stream itself

- /snapshot - JPEG snapshot, single stream frame

- /snapshotraw - YUV snapshot, single unannotated raw frame as-is from the cam

- /cmd - command input

- /cmd?vd=X - set video input to /dev/videoX

- /next - next camera, in order of /dev/videoX sorted by number

- /prev - previous camera

- /state - JSON of server state (added: IP addresses of clients)

- /cams - interface with stream and camera selection

- /favicon.ico - serves favicon file
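A small client-side sketch for the URLs in the list above, using only the Python standard library. The base address http://127.0.0.1:8000 is an assumption taken from the default binding and port mentioned in the makefile options, and the helper names are made up. Only /cmd?vd=X and /state are used here.

```python
import json
import urllib.request


def cmd_select_url(base, video_number):
    """Build the URL that switches the stream to /dev/video<video_number>."""
    return f"{base}/cmd?vd={int(video_number)}"


def switch_camera(base, video_number, timeout=2.0):
    """Ask the server to switch cameras; returns the HTTP status code."""
    with urllib.request.urlopen(cmd_select_url(base, video_number), timeout=timeout) as resp:
        return resp.status


def fetch_state(base, timeout=2.0):
    """Fetch and decode the JSON server state."""
    with urllib.request.urlopen(f"{base}/state", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    base = "http://127.0.0.1:8000"  # assumed address of a running server
    print(cmd_select_url(base, 2))
```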

related tricks

remote framebuffer screenshot

ssh $SERVER fbgrab -d /dev/fb1 /tmp/x.png

scp $SERVER:/tmp/x.png $TARGETFILE

v4l2loopback, linux

https://github.com/umlaeute/v4l2loopback

A kernel module that creates virtual video devices, /dev/videoX loopback/loop device interfaces.

IP camera to virtual webcam, Windows

Several choices, usually rely on DirectShow/Media Foundation (Microsoft Media Foundation, MMF) framework. [ref]

Virtual camera sources are created, usually by installing a DLL file.

Virtual cams are usually DirectShow devices. These work with DS-using software, but MMF-based software won't see them.

Not all programs support the desired camera interface type. Eg. Skype (app form) does not see them, while Skype (desktop form) does.

IrfanView in its "select scan/TWAIN source" menu offers local USB webcams but not the virtual ones. Windows 10 "Camera" app does not see them either.

AMCap has no problems.

Beware of 32/64 bit programs, they seem to require different versions of the running DLLs. Both are usually installed simultaneously.

shenyaocn camera bridge

https://github.com/shenyaocn/IP-Camera-Bridge

shows as "IP Camera Bridge Plus", behaves nicely, does not support HTTP redirect responses

OBS Studio

Open Broadcaster Software, with a virtual cam DirectShow plugin

Files

CAUTION: preliminary, not cleaned up, not release-ready, raw code for raw nerves

scripts

- scripts/camrotate.sh - finds first raspi board camera (whose v4l2-ctl -D output contains "platform:bcm2835-v4l2"), applies horizontal and vertical flip

- scripts/getpicam.sh - returns the /dev/videoX device assigned to onboard camera

GPIO controls

The display comes

- scripts/handlebuttons.py - python script to handle GPIO-attached buttons (also with HTTP server with redirect and keypress simulation)

  - so far only one function - switch to next camera

- scripts/handlebuttons-simple.py - python script to handle GPIO-attached buttons (buttons only)

- scripts/handlebuttonsloop.sh - run handlebuttons.py, restart it with a little pause if it exits

- scripts/restartbuttons.sh - kills handlebuttons.py via pgrep, so handlebuttonsloop.sh restarts it (for easier development)

Todo

- better software layout documentation

- added-code cleanup (MAJOR!)

- EXIF for the JPEGs

- with precision timestamp, server name, cam device path

- with possible signing, serial hashing...

- hot-setting resolutions

- selecting next-available if unsupported requested

- optional cropping of 4:3 frames to 16:9 (eg. 640x480 to 640x360)

- some softwares (Skype) crop 4:3 cams

- acquire the cam frame, memcpy the central region to beginning, do overlays, call compression

- per-cam setting remembered across switching

- dev structure in array, pointer in stream structure into array

- snapshot in JPEG with option for no overlays

- snapshots in different resolution

- for snapshot and snapshotraw

- framebuffer device screenshot

- via cams-like call, get PNG via fbgrab

- user-definable external scripts to run (like cams, map to /path)

- reserved list of cams to not connect to

- eg. faulty, or with another daemon running

- possibly with aliases, eg. to shmem structure

- mmapped shared buffers, so external processes can see/share fb (eg. machine vision)

- access to mmapped shared buffers, so external processes can feed "virtual cameras"

- eg. machine vision reading a display, so the display can be looked at remotely without competing for the video device

- machvis process reads device, mirrors frame to shmem structure, handles data; polycam reads shmem structure, waits for framecount increase, sends frame like it comes from ordinary webcam

- shmem files acting as sort of framebuffers

- maybe support for droidcam protocol? (different than http!) [ref]

- benchmark memcpy to see how demanding is having separate frames buffered for advanced operations (cropping, screen-sharing, faster raw-frame access...)

- memcpy hwbuf[] to separate array

- possibly remember last n frames

- motion detection, averaging for denoising...

- memcpy acquired frame to all n frames on cam switch

- richer options for overlays
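The 4:3 to 16:9 cropping item in the list above (copy the central region, then continue as usual) is cheap for packed formats, because whole rows are dropped and the remaining rows stay contiguous. A minimal sketch, assuming a packed frame with a fixed number of bytes per pixel (2 for YUYV) and a hypothetical function name:

```python
def crop_rows_center(frame, width, height, new_height, bytes_per_pixel=2):
    """Keep the central new_height rows of a packed frame (e.g. YUYV)."""
    stride = width * bytes_per_pixel  # bytes per image row
    if len(frame) != stride * height:
        raise ValueError("frame size does not match the given dimensions")
    if new_height > height:
        raise ValueError("cannot crop to a larger height")
    top = (height - new_height) // 2  # rows to skip at the top
    return frame[top * stride:(top + new_height) * stride]


if __name__ == "__main__":
    frame = bytes(640 * 480 * 2)  # dummy all-zero 640x480 YUYV frame
    cropped = crop_rows_center(frame, 640, 480, 360)
    print(len(cropped), "bytes for 640x360")
```

In C the same operation is a single memcpy (or memmove, when done in place) of the central block.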

Images

OpenCV/python tricks

OpenCV uses NumPy arrays, usually of uint8 type, for image handling. Pixels are stored in arrays, and can have one discrete value (for grayscale), two (for eg. interleaved YUYV), three (for RGB/BGR/YUV/HSV/HLS/LAB/LUV...), or four (RGBA/BGRA, when alpha channel is used).

A color image is typically a 3-dimensional array, in [y][x][color] format, with the color order of BGR, or blue-green-red.

A grayscale image is a 2-dimensional array only.

[y][x][RGB] array "a":

- a.shape = (y,x,3)

- a.ndim = 3

- a.itemsize = 1 (bytes per element: 1 = 8-bit, 2 = 16-bit, 4 = 32-bit, eg. float32)

- a.flags

- C_CONTIGUOUS : True (C-style continuous segment)

- F_CONTIGUOUS : False (Fortran-style continuous segment)

- OWNDATA : True (owns memory or borrows from another object)

- WRITEABLE : True (can be modified)

- ALIGNED : True (aligned to hardware architecture)

- WRITEBACKIFCOPY : False

- UPDATEIFCOPY : False (copy of another array, update base array when this gets deallocated)

read grayscale from YUYV shared memory, then read barcode

import numpy as np
from pyzbar import pyzbar

# read each even byte of binary frame (Y only)
raw=open('/dev/shm/imgtest.bin','rb').read(640*480*2)[::2]

# convert bytes to numpy array of uint8, reshape to [y][x]
grayscaleframe=np.frombuffer(raw,'uint8').reshape(480,640)

# decode barcode
print(pyzbar.decode(grayscaleframe))

read BGR from YUYV shared memory and save as jpeg

import cv2
import numpy as np

# read entire binary frame
raw=open('/dev/shm/imgtest.bin','rb').read(640*480*2)

# convert bytes to numpy array of uint8, then reshape to [y][x][depth], then convert to BGR
bgrframe=cv2.cvtColor( np.frombuffer(raw,'uint8').reshape(480,640,2), cv2.COLOR_YUV2BGR_YUY2)

# save file
cv2.imwrite('/tmp/x.jpg',bgrframe)

convert BGR to YUYV bytearray (colors from even pixels only, straight drop of colors of odd pixels)

# assumes cv2, np and a 640x480 bgrframe from the previous example
yuvframe=cv2.cvtColor( bgrframe, cv2.COLOR_BGR2YUV)

# convert to linear array
yuvframe=yuvframe.reshape(640*480*3)

# create target output, with 8bit unsigned
yuyv=np.zeros(640*480*2,dtype='uint8')

# populate each 2nd byte of target (Y) with each 3rd byte of source
yuyv[::2]=yuvframe[::3]

# populate each 4th+1 byte of target (U) with each 6th+1 byte of source
yuyv[1::4]=yuvframe[1::6]

# populate each 4th+3 byte of target (V) with each 6th+2 byte of source
yuyv[3::4]=yuvframe[2::6]

# convert
barr=bytearray(yuyv)

# ...alternatively...
barr=yuyv.tobytes()

pixel formats

- RGB: [R] [G] [B]

- BGR: [B] [G] [R]

- BGRA: [B] [G] [R] [A] (A=alpha, set to 255 during BGR2BGRA)

- BGR565: [B{5}+G{5..3}] [G{2..0}+R{5}]

- GRAY: [Y]

- YUV: [Y] [U] [V]

- YCrCb: [Y] [Cr] [Cb] (also YCC; chroma order is V-like before U-like; range for UV is clamped)

- YUYV (2-pixel): [ [Y1] [U1/2] ] [ [Y2] [V1/2] ] (converts to BGR but not back)

- XYZ: [X] [Y] [Z] (CIE XYZ colorspace)

- HSV: [H] [S] [V]

- HLS: [H] [L] [S]

- Lab: [L] [a] [b]

- Luv: [L] [u] [v]

There are also planar formats, with brightnesses and colors in separate arrays. For 4:2:0 data the chroma planes together take half the size of the luma plane:

- IYUV: [y][x][3] turns to [1.5y][x]; rows [0..y-1] are luma, rows [y..1.5y-1] are chroma

- I420: [y][x][3] turns to [1.5y][x]

- YV12: [y][x][3] turns to [1.5y][x]
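A short sketch of where the planes sit inside such a buffer, assuming the usual 4:2:0 planar layouts (I420/IYUV: Y, then U, then V; YV12: Y, then V, then U) and even width and height. The function names are made up for illustration.

```python
def i420_planes(width, height):
    """Return {plane: (offset, size)} in bytes for an I420/IYUV frame."""
    y_size = width * height
    c_size = (width // 2) * (height // 2)  # each chroma plane is quarter-size
    return {
        "Y": (0, y_size),
        "U": (y_size, c_size),
        "V": (y_size + c_size, c_size),
    }


def yv12_planes(width, height):
    """Same as I420, but with the V plane stored before the U plane."""
    planes = i420_planes(width, height)
    return {"Y": planes["Y"], "V": planes["U"], "U": planes["V"]}


if __name__ == "__main__":
    planes = i420_planes(640, 480)
    total = sum(size for (_, size) in planes.values())
    # Total is 1.5 bytes per pixel, i.e. 1.5 * height rows of width bytes.
    print(planes, total // 640, "rows")
```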

Usual number formats:

- CV_8U, uint8 - 8bit integer, 0..255

- CV_16U, uint16 - 16bit integer, 0..65535

- CV_32F, float - 32bit float, 0.0..1.0
